Despite Progress, Researchers Find More Potential Discrimination in Facebook Ads

A call for more transparency about employment ad images and their delivery

In 2019, following several lawsuits, Facebook stopped allowing employers to target specific ages, genders, or zip codes when advertising open positions.

The move was part of a series of actions the platform has taken in recent years to reduce discrimination in the advertising that appears there. Despite the changes, research from the Princeton School of Public and International Affairs has identified a new source of potential discrimination in employment advertising on Facebook.

Aleksandra Korolova, an assistant professor of computer science and public affairs, and Varun Nagaraj Rao, a graduate student in the Department of Computer Science, examined a new avenue for discrimination in job advertisements on Meta's platforms: advertisers featuring images of people that overrepresent or exclude certain demographics, and Meta's ad delivery algorithm amplifying that skew. The pair are based in the Center for Information Technology Policy, one of SPIA's centers and programs.

They found that the advertisers they studied commonly used images matching the gender stereotype of the job they were hiring for. That finding becomes more concerning given that Facebook's ad delivery algorithm can skew who ultimately sees an ad based solely on the demographics of the person pictured in it.

Their research, presented in June at the 2023 ACM Conference on Fairness, Accountability, and Transparency in Chicago, argues that the use of such images effectively targets or excludes jobseekers by gender.

“We identify that selective image use is indeed happening for some professions, and just knowing that it's happening is enough to draw attention to this issue so that we can begin to understand how this can be addressed,” says Korolova, whose research focuses on the societal impacts of algorithms and machine learning.

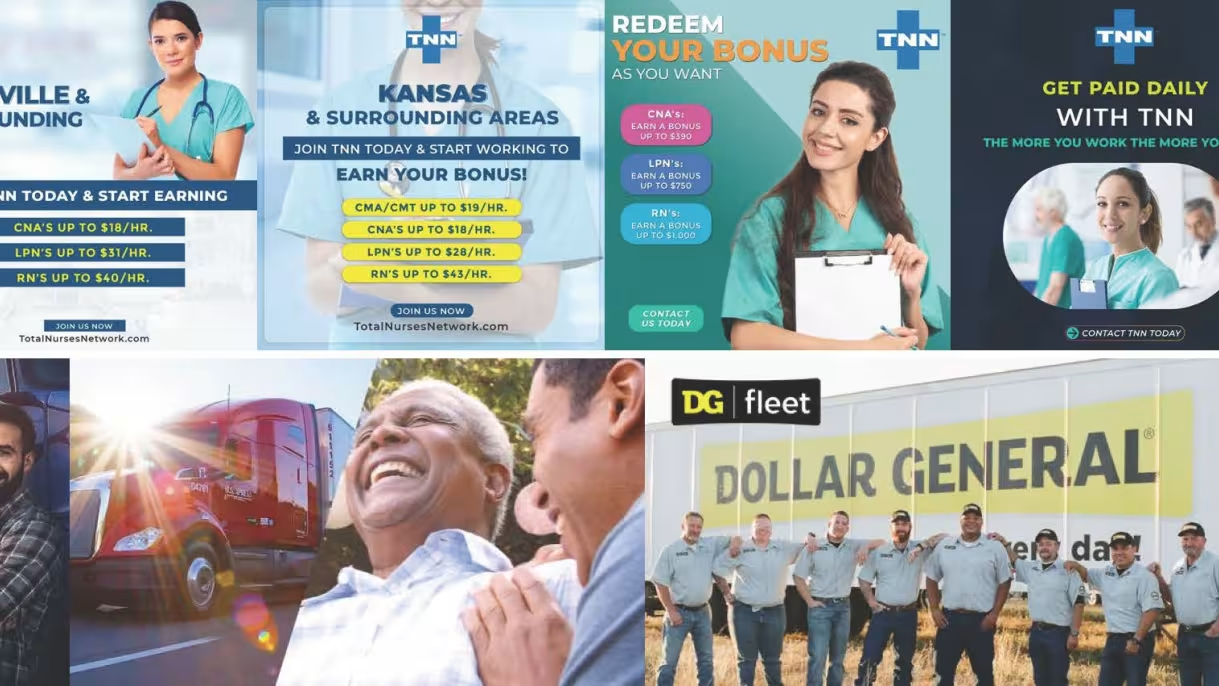

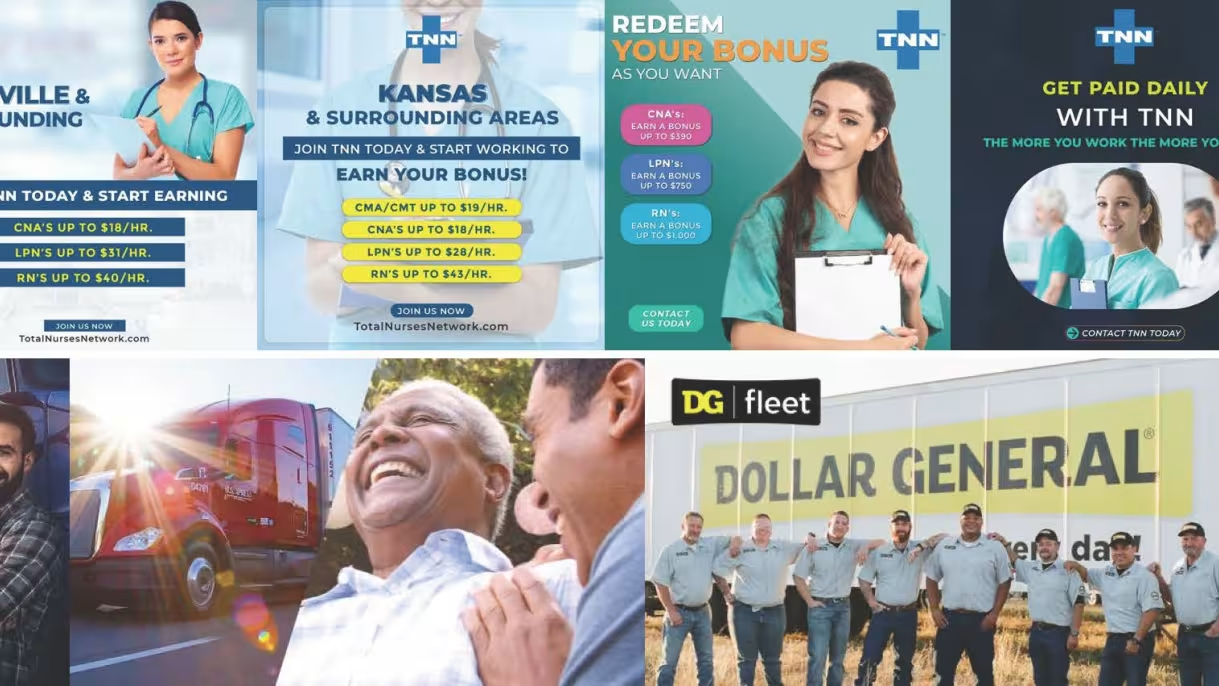

Korolova and Nagaraj Rao culled images from Facebook’s Ad Library to conduct a comprehensive study of gendered image selection in employment ads for two professions, truck drivers and nurses. A large share of advertisers for both professions predominantly selected images of people of the profession’s stereotypical gender: men for truck driving jobs and women for nursing positions.

Across all of their ad campaigns that ran on Facebook in January 2023 (excluding advertisements that did not contain images of people), 57% of the 159 advertisers for truck driving jobs depicted only men as truck drivers and 42% of the 259 advertisers for nursing jobs depicted only women as nurses. Additionally, among advertisers that put deliberate effort into image choice in their campaigns — that is, those that used at least 15 distinct images in their campaigns, at least 5 of which contained people — 83% of the distinct images in truck-driving ads depicted men; for the nurses, 73% of the distinct images depicted women.

On the other hand, when looking at a wider variety of occupations, the researchers found that some advertisers appeared to select images in order to promote gender and racial diversity among their future workforce.

Both cases underscore the influence of images on people's decision-making, including whether they apply for certain jobs, as demonstrated by previous research cited in the paper. “Images influence who applies to the job,” Nagaraj Rao says, “and we’ve quoted a few social science theories in our paper that draw evidence to that. Case law has also shown that images of people from the majority demographic depicted in housing ads discourages minorities from applying.”

Korolova and Nagaraj Rao also build on previous research that has shown that because of Facebook’s algorithms, ads on the platform can be disproportionately shown to users similar to those pictured in the ad.

Ads are big business for Facebook’s parent company, Meta, which counts on them to supply the bulk of its revenue. In 2022, about 97% of its revenue, roughly $114 billion, came from advertising. Korolova believes such heavy reliance on advertising creates an inherent obstacle to Facebook addressing discrimination in its algorithm and overall advertising product.

“I think legislation and settlements have contributed to Facebook making a lot of progress in addressing aspects of discrimination,” she says, “but the reason why it’s taken so long to identify the problems, come up with solutions, and implement those solutions is this financial incentive not to understand that a problem may exist.”

That’s why the paper’s central recommendation is either to enhance transparency about advertisers’ practices and the company’s algorithm or to disable ad delivery optimization for employment ads. “I’m hopeful that this will bring about positive change,” Nagaraj Rao says, “not just for the platform, but for its users and the general public.”

The optimism stems from two factors. First, Facebook already discloses certain additional data for ads concerning social issues, elections and politics. Second, concurrent research by Korolova, Basileal Imana (now a postdoctoral research associate at Princeton’s Center for Information Technology Policy), and John Heidemann has proposed a feasible approach to achieving this transparency.

“Such changes, which could help bring positive momentum to addressing these problems, are relatively easy to implement,” Korolova says. “My hope is that our research providing quantitative evidence that this is an actual problem will draw the attention of the platform and of regulators and that, therefore, this work will eventually effect positive change.”