The machine learning techniques scientists use to predict outcomes from large datasets may fall short when it comes to projecting the outcomes of people’s lives, according to a mass collaborative study led by researchers at Princeton University.

Published by 112 co-authors in the Proceedings of the National Academy of Sciences, the results suggest that sociologists and data scientists should use caution in predictive modeling, especially in the criminal justice system and social programs.

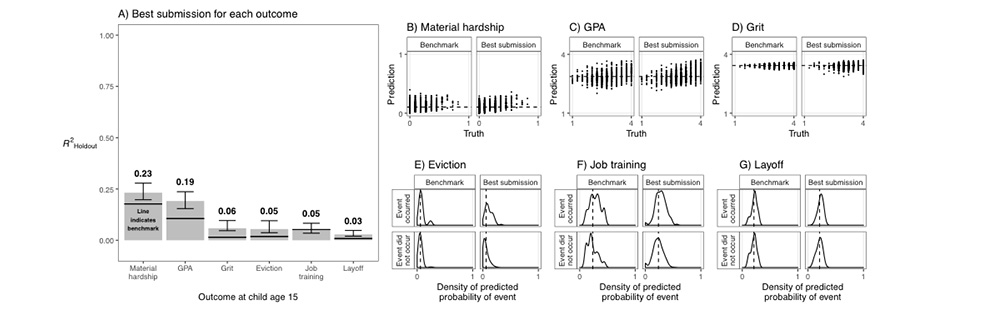

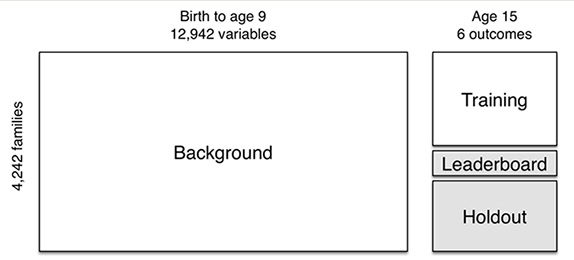

One hundred and sixty research teams of data and social scientists built statistical and machine-learning models to predict six life outcomes for children, parents, and households. Even with state-of-the-art modeling techniques and a high-quality dataset containing 13,000 data points about more than 4,000 families, the best AI predictive models were not very accurate.

“Here’s a setting where we have hundreds of participants and a rich dataset, and even the best AI results are still not accurate,” said study co-lead author Matt Salganik, professor of sociology at Princeton and interim director of the Center for Information Technology Policy, a joint center of the School of Engineering and Applied Science and the Woodrow Wilson School of Public and International Affairs.

“These results show us that machine learning isn’t magic; there are clearly other factors at play when it comes to predicting the life course,” he said. “The study also shows us that we have so much to learn, and mass collaborations like this are hugely important to the research community.”

The study did, however, reveal the benefits of bringing together experts from across disciplines in a mass-collaboration setting, Salganik said. In many cases, simpler models outperformed more complicated techniques, and teams with more accurate models came from disciplines less commonly associated with this research, such as politics, where research on disadvantaged communities is limited.

Salganik said the project was inspired by Wikipedia, one of the world’s first mass collaborations, which was created in 2001 as a shared encyclopedia. He pondered what other scientific problems could be solved through a new form of collaboration, and that’s when he joined forces with Sara McLanahan, the William S. Tod Professor of Sociology and Public Affairs at Princeton, as well as Princeton graduate students Ian Lundberg and Alex Kindel, both in the Department of Sociology.

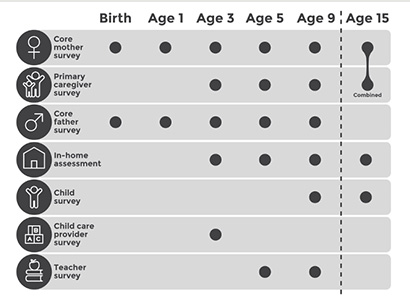

McLanahan is principal investigator of the Fragile Families and Child Wellbeing Study based at Princeton and Columbia University, which has been studying a cohort of about 5,000 children born in large American cities between 1998 and 2000, with an oversampling of children born to unmarried parents. The longitudinal study was designed to understand the lives of children born into unmarried families.

Through surveys collected in six waves (when the child was born and then when the child reached ages 1, 3, 5, 9 and 15), the study has captured millions of data points on children and their families. Another wave will be captured at age 22.

At the time the researchers designed the challenge, data from age 15 (which the paper calls the “hold-out data”) had not yet been made publicly available. This created an opportunity to ask other scientists to predict life outcomes of the people in the study through a mass collaboration.

“When we began, I really didn’t know what a mass collaboration was, but I knew it would be a good idea to introduce our data to a new group of researchers: data scientists,” McLanahan said.

“The results were eye-opening,” she said. “Either luck plays a major role in people’s lives, or our theories as social scientists are missing some important variables. It’s too early at this point to know for sure.”

The co-organizers received 457 applications from 68 institutions around the world, including several teams based at Princeton.

Using the Fragile Families data, participants were asked to predict one or more of the six life outcomes at age 15. These included child grade point average (GPA); child grit; household eviction; household material hardship; primary caregiver layoff; and primary caregiver participation in job training.

The challenge was based on the common task method, a research design used frequently in computer science but rarely in the social sciences. The organizers release some, but not all, of the data, and participants may use whatever technique they want to predict the withheld outcomes. The goal is to accurately predict the hold-out data, no matter how fancy a technique it takes to get there.
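A minimal sketch of how a common-task challenge might be scored, assuming each prediction is a number compared against the withheld outcome by mean squared error. The `holdout_r2` score, which measures how much of a baseline's error a submission removes when that baseline always predicts the mean of the released training outcomes, is an illustrative choice; the function names and toy GPA values are hypothetical, not taken from the Challenge's code.

```python
from statistics import fmean


def mean_squared_error(truth: list[float], predicted: list[float]) -> float:
    """Average squared gap between withheld outcomes and a team's predictions."""
    if not truth or len(truth) != len(predicted):
        raise ValueError("truth and predicted must be non-empty and the same length")
    return fmean((t - p) ** 2 for t, p in zip(truth, predicted))


def holdout_r2(truth, predicted, training_mean):
    """1 - MSE(model) / MSE(baseline), where the baseline always predicts training_mean.

    1.0 is a perfect prediction, 0.0 matches the baseline, negative is worse than it.
    """
    baseline = mean_squared_error(truth, [training_mean] * len(truth))
    if baseline == 0.0:
        raise ValueError("baseline error is zero; the score is undefined")
    return 1.0 - mean_squared_error(truth, predicted) / baseline


def score_submissions(truth, training_outcomes, submissions):
    """Score every submission (team name -> predictions) on the hold-out data, best first."""
    training_mean = fmean(training_outcomes)
    scores = {
        team: holdout_r2(truth, preds, training_mean)
        for team, preds in submissions.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    released_gpa = [2.5, 3.0, 3.5]  # hypothetical values shared with participants (mean 3.0)
    withheld_gpa = [2.0, 3.0, 4.0]  # hypothetical values kept back by the organizers
    ranking = score_submissions(withheld_gpa, released_gpa, {
        "team_a": [2.5, 3.0, 3.5],
        "team_b": [3.0, 3.0, 3.0],  # simply predicts the training mean
    })
    print(ranking)  # team_a scores 0.75; team_b scores 0.0
```

Under this scoring, a team that only predicts the training average earns 0, so a positive score reflects predictive signal beyond that baseline.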

Claudia Roberts, a Princeton graduate student studying computer science, tested GPA predictions in a machine learning course taught by Barbara Engelhardt, associate professor of computer science. In the first phase, Roberts trained 200 models using different algorithms. The coding effort was significant, and she focused solely on building the best models possible. “As computer scientists, we often times just care about optimizing for prediction accuracy,” Roberts said.

Roberts trimmed the feature set from 13,000 to 1,000 for her model. She did this after Salganik and Lundberg challenged her to look at the data as a social scientist — going through all of the survey questions manually. “Social scientists aren’t afraid of doing manual work and taking the time to truly understand their data. I ran many models, and in the end, I used an approach inspired by social science to prune down my set of features to those most relevant for the task.”

Roberts said the exercise was a good reminder of how complex humans are, which may be hard for machine learning to model. “We want these machine learning models to unearth patterns in massive datasets that we, as humans, don’t have the bandwidth or ability to detect. But you can’t just apply some algorithm blindly in hopes of answering some of society’s most pressing questions. It’s not that black and white.”

Erik H. Wang, a Ph.D. student in the Department of Politics at Princeton, had a similar experience with the challenge. His team made the most accurate prediction of material hardship among all the participating submissions.

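Initially, Wang and his team found that survey respondents had left many questions unanswered, making it difficult to locate meaningful variables for prediction. They combined conventional imputation techniques, which fill in missing answers with estimated values, with a method called LASSO, a form of regression that shrinks the weights of less useful variables to exactly zero and so keeps only a subset of them. This narrowed the data to 339 variables important to material hardship. From there, they ran LASSO again, which gave them a more accurate prediction of the household’s material hardship when the child was 15.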
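The two-stage procedure can be sketched as follows, under loud assumptions: unanswered questions (`None`) are filled with each column's observed mean as a stand-in for the team's conventional imputation, and LASSO is fit by plain coordinate descent on standardized variables. All function names, the penalty values, and the toy data are hypothetical; in the team's analysis the first stage kept 339 variables.

```python
from statistics import fmean


def impute_and_standardize(rows):
    """Fill None with each column's observed mean, then centre and scale every column.

    Returns a list of columns. Constant columns (including fully missing ones) become
    all zeros, so LASSO can never select them.
    """
    columns = []
    for col in zip(*rows):
        observed = [v for v in col if v is not None]
        fill = fmean(observed) if observed else 0.0
        filled = [fill if v is None else float(v) for v in col]
        mu = fmean(filled)
        sd = fmean([(v - mu) ** 2 for v in filled]) ** 0.5
        columns.append([(v - mu) / sd if sd > 0 else 0.0 for v in filled])
    return columns


def soft_threshold(z, lam):
    """Shrink z toward zero by lam; anything within lam of zero becomes exactly 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso(columns, y, lam, n_sweeps=100):
    """Coordinate-descent LASSO: minimise (1/2n)*||y - mean(y) - Xb||^2 + lam*||b||_1."""
    n = len(y)
    y_mean = fmean(y)
    residual = [v - y_mean for v in y]  # the intercept is handled by centring y
    beta = [0.0] * len(columns)
    for _ in range(n_sweeps):
        for j, x in enumerate(columns):
            norm = sum(v * v for v in x) / n  # 1.0 if standardized, 0.0 if constant
            if norm == 0.0:
                continue
            # Correlation of column j with the residual that leaves out column j's own term.
            rho = sum(xi * (ri + xi * beta[j]) for xi, ri in zip(x, residual)) / n
            new = soft_threshold(rho, lam) / norm
            delta = new - beta[j]
            if delta != 0.0:
                residual = [ri - xi * delta for xi, ri in zip(x, residual)]
                beta[j] = new
    return beta


def two_stage_lasso(rows, y, lam_screen, lam_refit):
    """Stage 1 keeps variables with non-zero weight; stage 2 refits LASSO on those only."""
    columns = impute_and_standardize(rows)
    screened = [j for j, b in enumerate(lasso(columns, y, lam_screen)) if b != 0.0]
    refit = lasso([columns[j] for j in screened], y, lam_refit)
    return dict(zip(screened, refit))  # original column index -> weight


if __name__ == "__main__":
    # Hypothetical toy survey: None marks an unanswered question.
    answers = [[1, 5, None], [2, None, 7], [3, 5, 7], [4, 6, 7], [5, 5, None]]
    hardship = [2, 4, 6, 8, 10]
    print(two_stage_lasso(answers, hardship, lam_screen=0.1, lam_refit=0.01))
```

The larger first-stage penalty screens aggressively, and the smaller second-stage penalty refits only the surviving variables. This is one plausible reading of running LASSO twice, not a description of the team's actual code.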

Wang and his team made two observations from the results: Answers from mothers were more helpful in predicting material hardship, and past outcomes are good at predicting future ones. These are hardly definitive or causal, though, Wang said; they are basically just correlations.

“Reproducibility is extremely important. And reproducibility of machine learning solutions requires one to follow specific protocols. Another lesson learned from this exercise: For human life course outcomes, machine learning can only take you so far,” Wang said.

Greg Gundersen, a graduate student in computer science, ran into another issue: locating the data points that were most predictive of outcomes. At the time, users had to scroll through dozens of PDFs to find the relevant question and answer. For example, Gundersen’s model told him that the most predictive variable for eviction was “m4a3.” Finding out what this variable meant required digging through PDFs of the original questionnaires, which showed that it recorded the answer to: “How many months ago did he/she stop living with you (most of the time)?”

So Gundersen, who worked as a web developer before coming to Princeton, wrote a small script to scrape the PDFs and extract metadata about the variable names. He then hosted this metadata in a small web application searchable by keyword. Gundersen’s work inspired the Fragile Families team, and a more developed version of his website is now available for future researchers.
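A rough sketch of the metadata-extraction and keyword-search idea, assuming the questionnaire text has already been pulled out of the PDFs (for example with a PDF text-extraction library) and that each question appears on one line as a variable name, a period, and the question wording. That line format, the function names, and the placeholder `m4a4` entry are hypothetical; only the `m4a3` wording comes from the questionnaire quoted above.

```python
import re

# ASSUMPTION: a question line looks like "m4a3. How many months ago ...".
# Real questionnaire PDFs may be laid out differently.
VARIABLE_LINE = re.compile(r"^\s*([a-z]\d[a-z0-9_]*)\.\s+(.+?)\s*$", re.IGNORECASE)


def extract_metadata(text: str) -> dict[str, str]:
    """Map each variable name found in extracted questionnaire text to its wording."""
    metadata = {}
    for line in text.splitlines():
        match = VARIABLE_LINE.match(line)
        if match:  # lines such as page headers or footers do not match and are skipped
            name, question = match.groups()
            metadata[name.lower()] = question
    return metadata


def search(metadata: dict[str, str], keyword: str) -> list[tuple[str, str]]:
    """Case-insensitive keyword search over variable names and question wording."""
    needle = keyword.lower()
    return sorted(
        (name, question)
        for name, question in metadata.items()
        if needle in name or needle in question.lower()
    )


if __name__ == "__main__":
    extracted = "\n".join([
        "m4a3. How many months ago did he/she stop living with you (most of the time)?",
        "Page 12",  # page furniture that should be ignored
        "m4a4. (hypothetical placeholder question)",
    ])
    index = extract_metadata(extracted)
    for name, question in search(index, "months"):
        print(name, "->", question)
```

Keeping the index as a plain dictionary makes it easy to serialize and serve from a small web application; a larger deployment would more likely use a proper full-text index.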

“The outcomes this challenge produced are incredible,” Salganik said. “We now can create these simulated mass collaborations by reusing people’s code and extracting their techniques to look at different outcomes, all of which will help us get closer to understanding the variability across families.”

The team is currently applying for grants to continue research in this area, and it has also published 12 of the teams’ results in a special issue of Socius, a new open-access journal from the American Sociological Association. To support additional research in this area, all the submissions to the challenge (code, predictions and narrative explanations) are publicly available.

The study was supported by the Russell Sage Foundation, the National Science Foundation (grant no. 1761810), and the Eunice Kennedy Shriver National Institute of Child Health and Human Development (NICHD; grant no. P2-CHD047879).

Funding for the Fragile Families and Child Wellbeing Study was provided by NICHD (grant nos. R01-HD36916, R01-HD39135) and a consortium of private foundations including the Robert Wood Johnson Foundation.

The paper, “Measuring the predictability of life outcomes with a scientific mass collaboration,” was published on March 30 by PNAS.